Emerging Cybersecurity Risks: The Exploitation of Generative Artificial Intelligence (Generative AI)

Security experts have identified the misuse of Generative AI in cybercrime as a significant and growing threat. The rapid advancement of Generative AI technology has enabled individuals without security expertise to engage in malicious activities, making cyber threats both more sophisticated and more accessible. This article explores the nature of Generative AI and examines various cyber threats that exploit this technology.

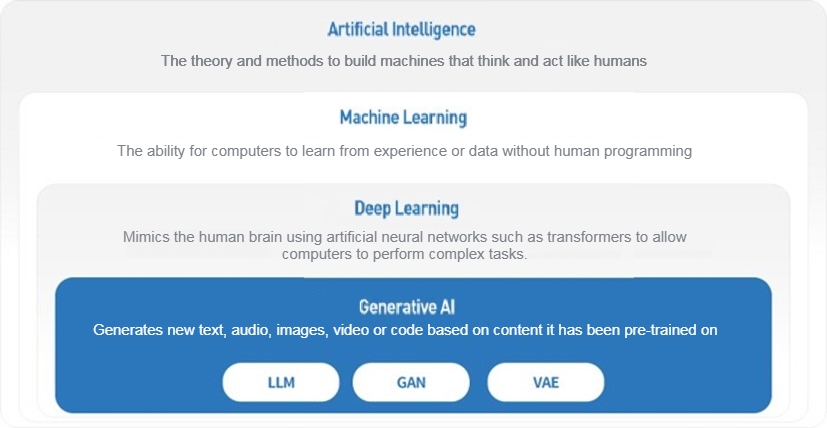

Generative Artificial Intelligence (AI)

Generative AI uses algorithms designed to mimic human behavior and cognitive processes, and it goes beyond processing or analyzing existing data. Instead, it produces original output, creating new content or data based on the input it receives and the patterns it has learned from its training data.

One of the best-known examples of Generative AI is ChatGPT, developed by OpenAI. ChatGPT is a conversational AI service built on the Generative Pre-trained Transformer (GPT) model, trained on large amounts of text data. It communicates naturally with humans across diverse topics, from delivering information to responding to creative ideas and solving technical problems. What distinguishes ChatGPT is its versatility: in addition to its core chatbot functions, it can create various kinds of work, such as writing articles, composing lyrics, and performing simple coding tasks. This multifaceted capability sets ChatGPT apart within the field of Generative AI.

Cybersecurity Risks Exploiting Generative AI

Generative AI, led by ChatGPT, has naturally emerged as an unparalleled game changer across many industries. At the same time, it is increasingly being exploited for cybercrime.

#1 Emergence of Cyber Threat ‘WormGPT’

A notable cyber threat exploiting Generative AI is ‘WormGPT’. Often described as a dark web counterpart to ChatGPT, WormGPT is a cybercrime tool built on large language model (LLM)-based Generative AI and designed specifically for malicious purposes. It has been trained on extensive sets of malware-related and hacking data. When an attacker describes the desired result in natural language, WormGPT generates tailored output, making it a potent tool for cybercriminals.

> Related news: WormGPT: AI tool designed to help cybercriminals will let hackers develop attacks on large scale, experts warn (SKY News)

#2 Elevated Threat of Sophisticated Phishing Attacks

Phishing attacks have reached unprecedented levels of sophistication because Generative AI overcomes the linguistic weaknesses of earlier machine-generated text, such as grammatical errors and poor handling of context. Business Email Compromise (BEC) exemplifies this trend: attackers use deceptive emails to manipulate victims into transferring funds or exposing confidential information. Tools like WormGPT help refine these phishing messages, making BEC attacks even more effective. In the United States alone, the FBI reports more than 20,000 BEC cases, highlighting the severity of the issue, which is a concern worldwide and particularly in Korea.

Beyond conventional phishing, the exploitation of deep voice technology poses a growing threat. Advances in text-to-speech (TTS) technology now allow attackers to commit voice phishing crimes simply by obtaining a sample of the voice they want to imitate. Deep voice programs built on Generative AI have become widely popular and can be downloaded from the web, and videos that synthesize celebrity voices with these programs are easy to find on platforms like YouTube. This rapid progress in deep voice technology makes addressing phishing attacks urgent, not only globally but also within Korea.

> Related news: Study shows attackers can use ChatGPT to significantly enhance phishing and BEC scams (CSO)

#3 Increased Accessibility of Cybercrime to Nonprofessionals

Using Generative AI in cybercrime automates and speeds up the collection and analysis of information about a target. This ease of use lets nonprofessionals attempt a range of cyberattacks, including voice forgery through deep voice manipulation, writing malicious code, and identifying vulnerabilities.

A service has also appeared on hacking forums, particularly on the dark web, that offers criminal targets along with specific methods, generated by WormGPT, for phishing emails or hacking-related files. This poses a significant risk: such a service not only facilitates cybercrime but also makes it accessible to a wider range of people who may have no technical proficiency in security.

Preparing for the Abuse of Generative AI

Ian Lim, Field Chief Security Officer (CSO) for the Asia Pacific region at Palo Alto Networks, said, “The cyber attack, which needed 44 days in the past, took only 5 days last year, and now the time has been shortened enough to take only a few hours.” He emphasized, “New and variant attacks utilizing Generative AIs like WormGPT are rapidly progressing.”

Cyber threats that evolve daily can cause businesses significant losses: not only substantial recovery costs but also the leakage of confidential or sensitive information, loss of trust, and damage to reputation. Security personnel should therefore establish security policies tailored to their company's unique business environment and monitor them continuously to prepare for threats such as malware infections, hacking, and information leaks. Where it is difficult to secure dedicated security staff, or where a more systematic approach to security management is required, the services of a professional security company can help organizations respond quickly and seamlessly to increasingly diverse cyber threats.

Innovation in IT often brings concerns and unforeseen side effects. Even so, it is crucial to prevent groundbreaking technology from being misused for criminal activity that creates new threats and damage. Penta Security is committed to ongoing research to counter increasingly sophisticated cyber threats and provide more advanced security solutions. Look forward to a safer cloud security environment built through Penta Security’s Cloudbric service!